TOS Aurora Architecture

Platforms

TufinOS Platforms

- Tufin appliances, pre-installed with the TufinOS operating system and TOS Aurora

- VMware ESXi 6.5, 6.7, 7.0, or 8.0 (ESXi 8.0 requires TufinOS 4.20 or later), vSphere / vRA

Non-TufinOS Platforms

- On-premises physical servers

- VMware ESXi 6.5, 6.7, 7.0, or 8.0 (ESXi 8.0 requires TufinOS 4.20 or later), vSphere / vRA

- Microsoft Azure Virtual Machines

- Amazon AWS instances

- Google Cloud Platform instances (for R22-2 PHF1.0.0 and later)
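The ESXi constraint in the platform lists can be expressed as a pre-deployment check. This is a minimal sketch, not a Tufin tool: `esxi_host_supported` and `parse_version` are hypothetical names, versions are assumed to be plain `major.minor` strings, and the TufinOS 4.20 rule is applied only when a TufinOS version is supplied.

```python
from __future__ import annotations

# Hypothetical pre-deployment check derived from the platform lists above.
# Not a Tufin utility; versions are assumed to be "major.minor" strings.
SUPPORTED_ESXI = {"6.5", "6.7", "7.0", "8.0"}
MIN_TUFINOS_FOR_ESXI_8 = (4, 20)


def parse_version(version: str) -> tuple[int, ...]:
    """Convert "4.20" to (4, 20) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def esxi_host_supported(esxi_version: str, tufinos_version: str | None = None) -> bool:
    """Return True if the ESXi version appears in the supported list.

    When a TufinOS version is supplied, ESXi 8.0 additionally requires
    TufinOS 4.20 or later (assumption: the rule is skipped when it is None).
    """
    if esxi_version not in SUPPORTED_ESXI:
        return False
    if esxi_version == "8.0" and tufinos_version is not None:
        return parse_version(tufinos_version) >= MIN_TUFINOS_FOR_ESXI_8
    return True


if __name__ == "__main__":
    print(esxi_host_supported("8.0", "4.10"))  # False: TufinOS is older than 4.20
    print(esxi_host_supported("8.0", "4.20"))  # True
    print(esxi_host_supported("6.0"))          # False: 6.0 is not listed
```

Comparing versions as integer tuples matters here: compared as strings, "4.3" would sort after "4.20".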

Operating systems

TOS Aurora can be deployed only on these operating systems:

- TufinOS

- CentOS 7.8 or 7.9

- Red Hat Enterprise Linux 8.6, 8.8, or 8.9 (for R22-2 PHF1.0.0 and later)

- Rocky Linux 8.6, 8.8, or 8.9 (for R22-2 PHF1.0.0 and later)
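The operating system list can be encoded as a lookup table for an installation pre-check. A minimal sketch under stated assumptions: the distribution identifiers (`tufinos`, `centos`, `rhel`, `rocky`) and the `r22_2_phf1_or_later` flag are hypothetical inputs the caller supplies, and TufinOS is accepted at any version because the list gives none.

```python
# Hypothetical installation pre-check encoding the operating system list above.
# Distribution identifiers are assumed names supplied by the caller.
SUPPORTED_OS = {
    "centos": {"7.8", "7.9"},
    "rhel": {"8.6", "8.8", "8.9"},
    "rocky": {"8.6", "8.8", "8.9"},
}
# Listed as supported only for R22-2 PHF1.0.0 and later.
REQUIRES_R22_2_PHF1 = {"rhel", "rocky"}


def os_supported(distro: str, version: str, r22_2_phf1_or_later: bool) -> bool:
    """Return True if TOS Aurora can be deployed on this OS and version."""
    distro = distro.lower()
    if distro == "tufinos":
        return True  # the list names TufinOS without a version constraint
    if version not in SUPPORTED_OS.get(distro, set()):
        return False
    if distro in REQUIRES_R22_2_PHF1 and not r22_2_phf1_or_later:
        return False
    return True


if __name__ == "__main__":
    print(os_supported("CentOS", "7.9", r22_2_phf1_or_later=False))  # True
    print(os_supported("rocky", "8.8", r22_2_phf1_or_later=False))   # False
    print(os_supported("rhel", "9.2", r22_2_phf1_or_later=True))     # False
```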

Kubernetes

TOS Aurora is a container-based application that runs on Kubernetes (the K3s distribution).

Clusters and Nodes

TOS Aurora is initially installed on a single server (or 'node'), which we refer to as the primary data node.

Additional nodes can be added to the cluster to support larger workloads or to enable high availability. Even a single node on its own is considered a cluster.

There are two types of nodes: worker nodes, which perform compute operations, and data nodes, which perform data storage and handling operations. The primary data node is a data node that also has the system management role.
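The node and cluster model described above can be sketched as a small data structure. The class and field names are hypothetical, chosen for illustration; the sketch assumes a cluster has exactly one primary data node, and it shows that a primary data node on its own already forms a cluster.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class NodeType(Enum):
    WORKER = "worker"  # performs compute operations
    DATA = "data"      # performs data storage and handling operations


@dataclass
class Node:
    name: str
    node_type: NodeType
    primary: bool = False  # primary data node: a data node with the system management role


@dataclass
class Cluster:
    name: str
    nodes: list[Node] = field(default_factory=list)

    def primary_node(self) -> Node:
        """Return the primary data node, assuming exactly one per cluster."""
        primaries = [n for n in self.nodes if n.primary]
        if len(primaries) != 1:
            raise ValueError(
                f"{self.name}: expected one primary data node, found {len(primaries)}"
            )
        if primaries[0].node_type is not NodeType.DATA:
            raise ValueError(f"{self.name}: the primary node must be a data node")
        return primaries[0]

    def count(self, node_type: NodeType) -> int:
        """Count the nodes of one type in this cluster."""
        return sum(1 for n in self.nodes if n.node_type is node_type)


if __name__ == "__main__":
    # Basic configuration: one cluster whose only node is the primary data node.
    central = Cluster("central", [Node("node-1", NodeType.DATA, primary=True)])
    # Worker nodes can be added later for more compute capacity.
    central.nodes.append(Node("worker-1", NodeType.WORKER))
    print(central.primary_node().name, central.count(NodeType.WORKER))  # node-1 1
```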

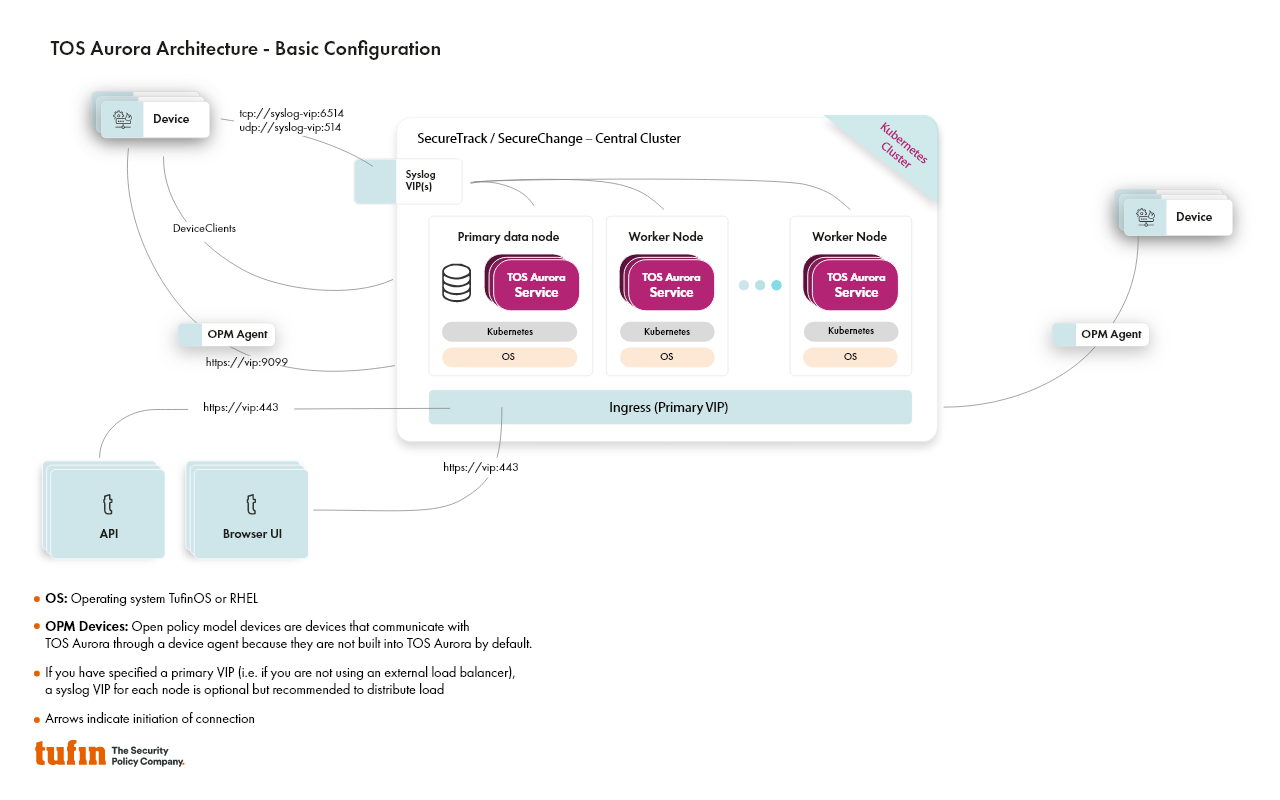

Basic Configuration

In its most basic configuration, TOS Aurora consists of a single cluster (the central cluster) containing a single node (the primary data node). Worker nodes can be added to increase computing power, depending on the load your system will need to handle.

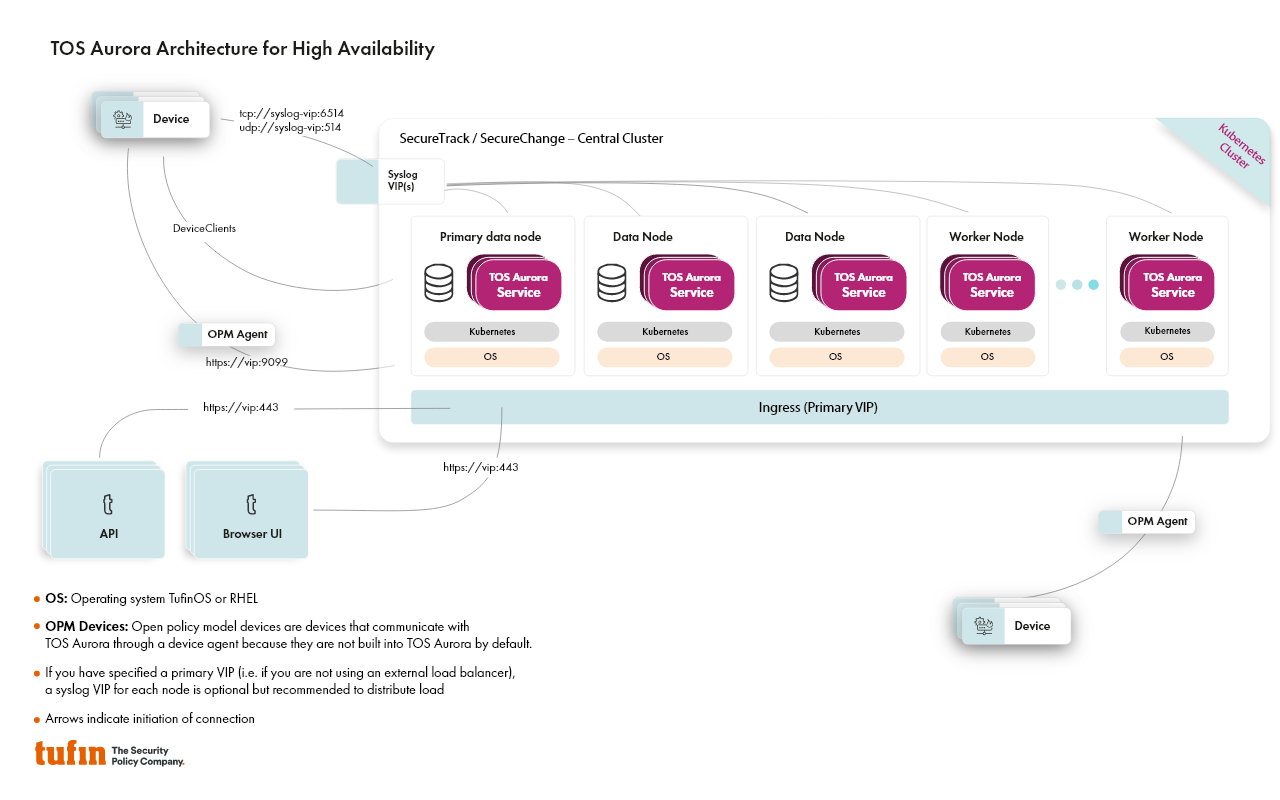

High Availability Configuration

For high availability, the cluster requires two additional data nodes, for a total of three data nodes including the primary data node (see High Availability). As in the basic configuration, worker nodes can be added as needed.
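The high availability requirement comes down to a count of data nodes. In this sketch, `ha_eligible` is a hypothetical helper that takes one entry per node; it treats three data nodes (the primary data node plus the two additional ones) as a minimum and ignores worker nodes, which can be added as needed. The High Availability page remains the authoritative source for the exact requirements.

```python
from __future__ import annotations

from collections import Counter

# Primary data node plus the two additional data nodes required for HA.
# Treating this as a minimum (rather than an exact count) is an assumption.
MIN_DATA_NODES_FOR_HA = 3


def ha_eligible(node_types: list[str]) -> bool:
    """Return True if a cluster has enough data nodes for high availability.

    node_types holds one entry per node, either "data" or "worker";
    the primary data node is counted as "data". Worker nodes do not
    affect eligibility.
    """
    counts = Counter(node_types)
    unknown = set(counts) - {"data", "worker"}
    if unknown:
        raise ValueError(f"unknown node types: {sorted(unknown)}")
    return counts["data"] >= MIN_DATA_NODES_FOR_HA


if __name__ == "__main__":
    print(ha_eligible(["data", "worker", "worker"]))        # False: only the primary data node
    print(ha_eligible(["data", "data", "data", "worker"]))  # True
```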

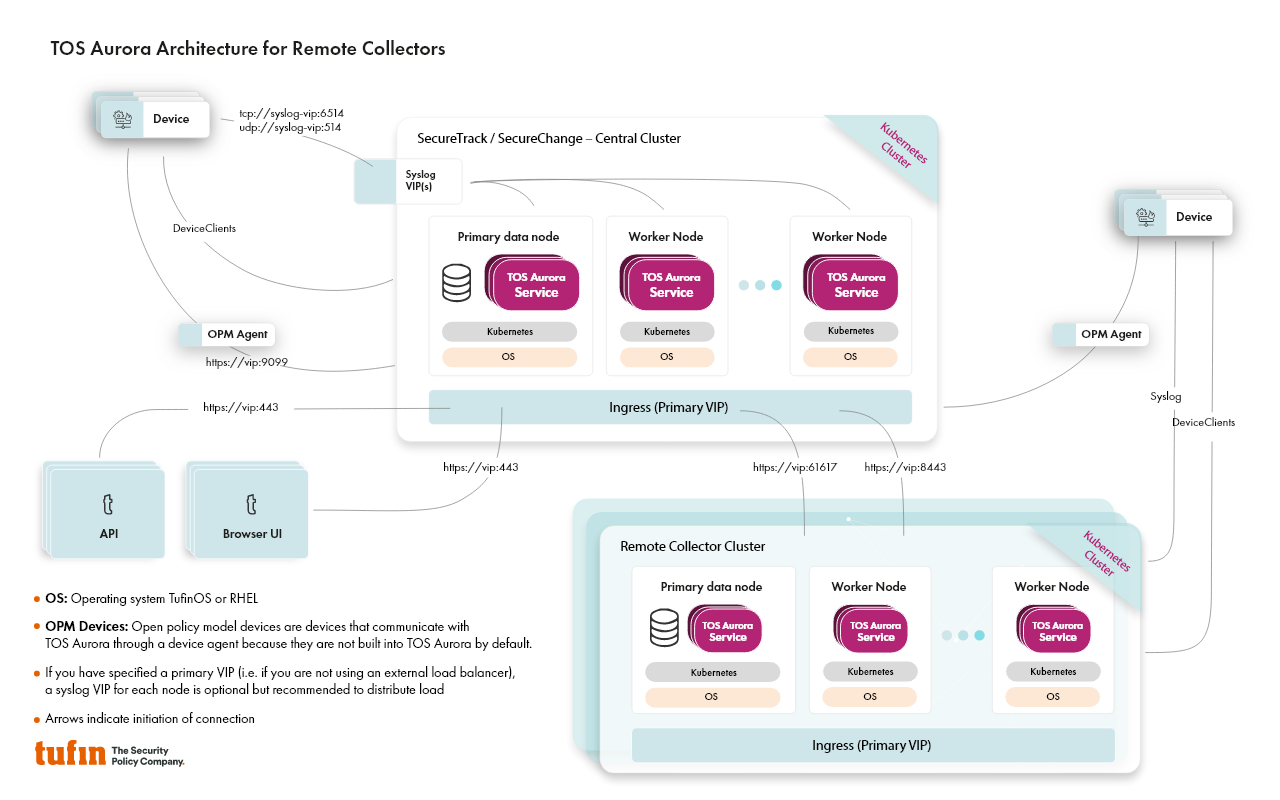

Remote Collector Configuration

I/O efficiency can be improved by adding remote collector clusters, whose primary purpose is to interact with monitored devices. As in the basic configuration, worker nodes can be added to remote collector clusters as needed. Remote collectors cannot run under high availability; however, they can be connected to a central cluster that runs under high availability.
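The constraints on remote collectors can be checked against a planned topology. A minimal sketch with hypothetical names: `ClusterSpec` and `validate_topology` are illustrative, and the rule that every remote collector must reference a central cluster is an assumption added for illustration, since the text only states that a remote collector can be connected to a central cluster.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ClusterSpec:
    name: str
    role: str                    # "central" or "remote_collector"
    high_availability: bool = False
    worker_nodes: int = 0        # workers may be added to either kind of cluster
    central: str | None = None   # remote collectors: name of the central cluster


def validate_topology(clusters: list[ClusterSpec]) -> list[str]:
    """Return a list of problems; an empty list means the plan passes."""
    problems = []
    centrals = {c.name for c in clusters if c.role == "central"}
    for c in clusters:
        if c.role == "central":
            continue  # a central cluster may or may not run under HA
        if c.role != "remote_collector":
            problems.append(f"{c.name}: unknown role {c.role!r}")
            continue
        if c.high_availability:
            problems.append(f"{c.name}: remote collectors cannot run under high availability")
        # Assumption for illustration: each remote collector references a central cluster.
        if c.central not in centrals:
            problems.append(f"{c.name}: not connected to a known central cluster")
    return problems


if __name__ == "__main__":
    plan = [
        ClusterSpec("central", "central", high_availability=True),
        ClusterSpec("rc-east", "remote_collector", worker_nodes=1, central="central"),
        ClusterSpec("rc-west", "remote_collector", high_availability=True, central="central"),
    ]
    for problem in validate_topology(plan):
        print(problem)
```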

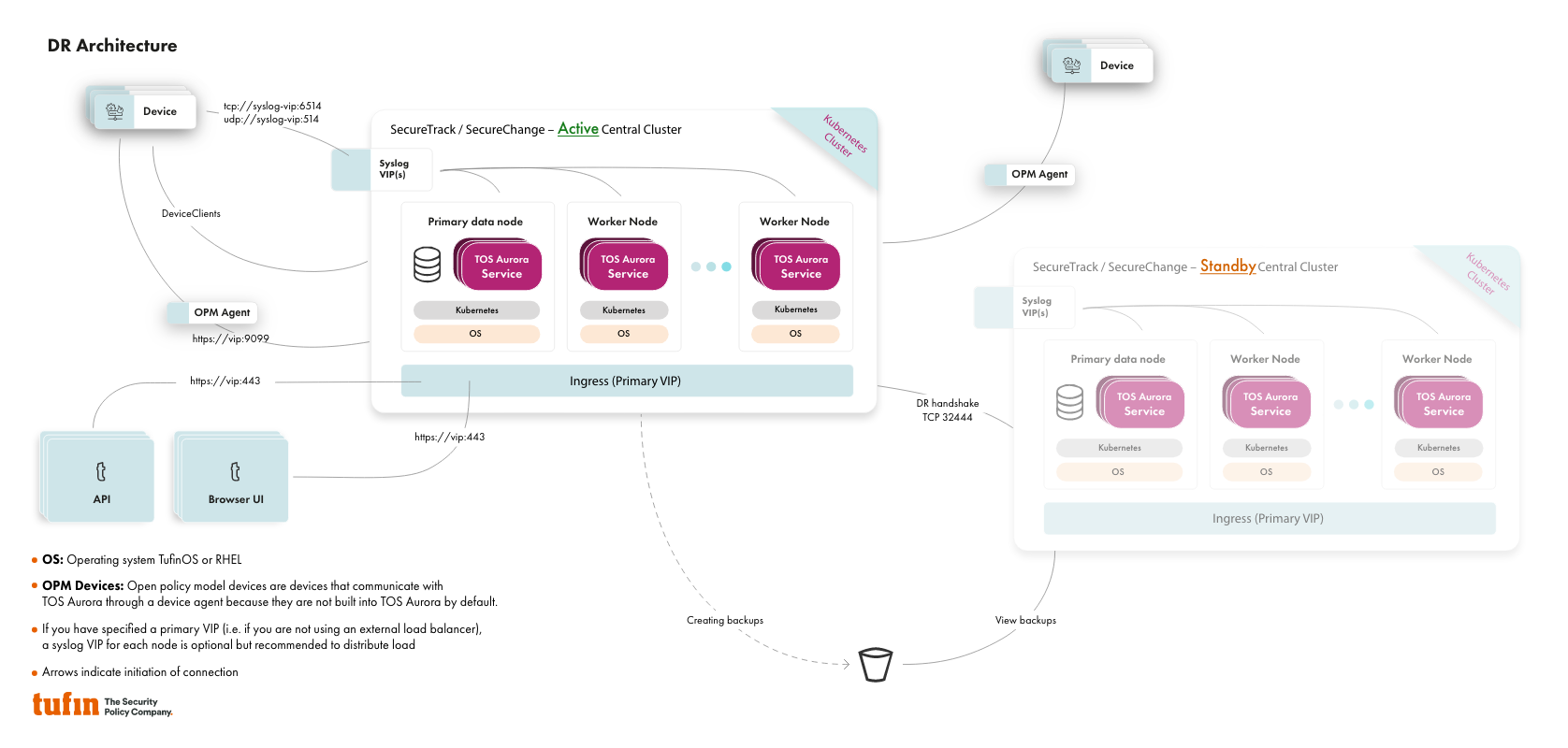

Disaster Recovery

For disaster recovery, you need to have two Tufin Orchestration Suite (TOS) clusters connected to the same external backup storage. After this connection is complete, set up the disaster recovery feature.
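The disaster recovery prerequisite (two TOS clusters sharing one external backup storage) can be checked before the feature is set up. A minimal sketch: `TosCluster`, `dr_prerequisites`, and the `backup_storage` identifier are hypothetical, and storage targets are compared as plain strings, so two different spellings of the same location would be reported as a mismatch.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TosCluster:
    name: str
    backup_storage: str | None = None  # hypothetical identifier of the external backup target


def dr_prerequisites(clusters: list[TosCluster]) -> list[str]:
    """Return unmet disaster recovery prerequisites; an empty list means ready."""
    problems = []
    if len(clusters) != 2:
        problems.append(f"expected two TOS clusters, got {len(clusters)}")
    missing = [c.name for c in clusters if not c.backup_storage]
    if missing:
        problems.append("no external backup storage: " + ", ".join(missing))
    # Compared as plain strings: different spellings of one location count as different.
    targets = {c.backup_storage for c in clusters if c.backup_storage}
    if len(targets) > 1:
        problems.append("clusters are connected to different backup storage")
    return problems


if __name__ == "__main__":
    site_a = TosCluster("site-a", "backup-store-1")
    site_b = TosCluster("site-b", "backup-store-1")
    print(dr_prerequisites([site_a, site_b]))  # []
```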