
Disaster Recovery

Overview

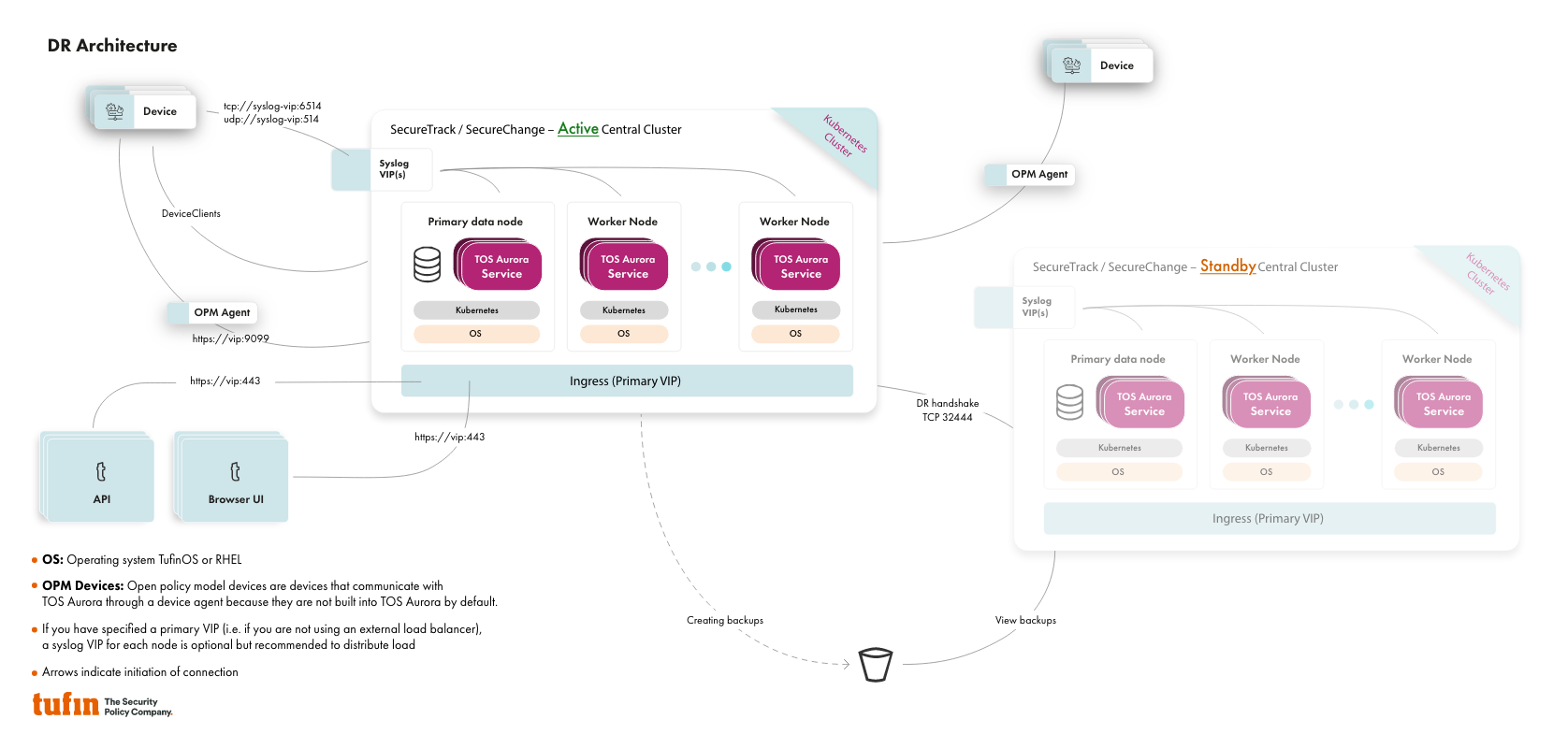

Tufin Orchestration Suite (TOS) disaster recovery (DR) can be implemented to create redundancy across sites. Failover is manual, but bringing the standby cluster into operation triggers an automatic restore: when you switch between the two clusters, data from the latest backup is restored to the new active cluster.

DR is meant for redundancy; it is not a high availability (HA) solution. HA must be deployed where all data nodes are located on the same site. Therefore, if you want both HA and site redundancy, you must deploy HA separately on each cluster.

A switchover entails some data loss, because of the time that elapses between completing the last backup on the previous active cluster and completing the restore to the new active cluster.
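To make the size of this window concrete, the following Python sketch computes the interval between the two events named above. The function name and the timestamps are hypothetical and are not part of TOS; it is a planning aid only.

```python
from datetime import datetime, timedelta, timezone


def data_loss_window(last_backup_done: datetime, restore_done: datetime) -> timedelta:
    """Return the time between the last completed backup and the completed restore.

    Hypothetical helper for planning purposes only; it is not part of TOS.
    """
    if restore_done < last_backup_done:
        raise ValueError("restore cannot complete before the backup it restores")
    return restore_done - last_backup_done


# Illustrative timestamps (UTC), not taken from a real deployment.
backup_done = datetime(2023, 7, 10, 6, 10, tzinfo=timezone.utc)
restore_done = datetime(2023, 7, 10, 9, 40, tzinfo=timezone.utc)
window = data_loss_window(backup_done, restore_done)
print(window)  # 3:30:00
```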

DR Architecture

For DR, you need two TOS clusters connected to the same external backup storage. DR is initiated on the cluster you designate as the active cluster. The second cluster then becomes the standby cluster by default.

Both clusters must be up and running; however, the standby cluster will only have a limited number of services running and will not be able to receive requests.

Prerequisites

- Requires a High Availability (HA) license.
- Confirm that all required ports are open, see Central Cluster Ports.
- Two clusters with the same configuration:
  - If you configure HA on one cluster, you must configure HA also on the second cluster.
  - Both clusters must have the same modules (SecureTrack only or SecureTrack and SecureChange).
  - Both clusters must meet the prerequisites listed in the installation procedure.
- Connect both clusters to the same external backup storage location. Local storage locations are not supported.
- Allow IP connectivity between the two clusters. Network Address Translation (NAT) is not supported.
- Set the cluster VIP to a local address to avoid overwriting it when restoring a backup.
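The "same configuration" prerequisites can be checked before starting. The Python sketch below assumes you have already collected a few facts about each cluster by hand. The `ClusterConfig` class, its field names, and the storage identifier are all hypothetical; TOS does not provide this structure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterConfig:
    """Hypothetical summary of one cluster; the field names are illustrative."""
    name: str
    ha_enabled: bool
    modules: frozenset      # for example {"ST"} or {"ST", "SC"}
    backup_storage: str     # identifier of the external backup location


def dr_mismatches(a: ClusterConfig, b: ClusterConfig) -> list:
    """List the prerequisite mismatches between two clusters (empty if none)."""
    problems = []
    if a.ha_enabled != b.ha_enabled:
        problems.append("HA must be configured on both clusters or on neither")
    if a.modules != b.modules:
        problems.append("both clusters must have the same modules")
    if a.backup_storage != b.backup_storage:
        problems.append("both clusters must use the same external backup storage")
    return problems


# Placeholder values for illustration only.
site_a = ClusterConfig("site-a", False, frozenset({"ST", "SC"}), "nfs://backup/tos")
site_b = ClusterConfig("site-b", False, frozenset({"ST"}), "nfs://backup/tos")
print(dr_mismatches(site_a, site_b))
```

An empty list means the two summaries agree on the points checked here. Ports, licensing, and NAT are not covered by this sketch.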

Setup and Initialization

Initializing Disaster Recovery

Run tos dr init on the cluster that will initially be designated the active cluster.

Description

Initializes DR on a cluster and makes it the active cluster. The backup destination must be set to external storage before you run the command. The command also sets the backup policy in the same way as sudo tos backup policy set. Disaster recovery uses the UTC time zone.

Syntax

[<ADMIN> ~]$ sudo tos dr init [-n <NAME>][--rate <RATE>][--hour <HOUR>][--minute <MINUTE>]

Parameters

| Parameter | Description | Required/Optional | Possible Values |
|---|---|---|---|
| -n, --name | Use this if you want to provide a name to help identify this cluster. If you don't use it, the hostname of the primary node will be used. | Optional | Standard name convention |
| --rate | Backup frequency (in hours). | Optional | 24 (default), 12, 8, 6 |
| --hour | Hour when the first daily backup occurs. Format is HH. | Optional | Default: 00 |
| --minute | Minute of the hour when the first daily backup occurs. Format is mm. | Optional | Default: 00 |
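The scheduling parameters combine into a set of daily backup times. The Python sketch below works this out under one assumption that the table does not confirm: that backups repeat every rate hours, counted from the first daily backup. The function name is hypothetical.

```python
def daily_backup_times(rate: int = 24, hour: int = 0, minute: int = 0) -> list:
    """Return the UTC times of day ("HH:MM") at which backups would start.

    Assumes backups repeat every `rate` hours starting from hour:minute,
    which is an interpretation of the parameter table, not confirmed behavior.
    """
    if rate not in (24, 12, 8, 6):
        raise ValueError("rate must be one of 24, 12, 8, 6")
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 00-23 and minute 00-59")
    return [f"{(hour + i * rate) % 24:02d}:{minute:02d}" for i in range(24 // rate)]


print(daily_backup_times(rate=8, hour=2, minute=30))  # ['02:30', '10:30', '18:30']
```

Because DR uses UTC, convert these times to your local time zone when planning maintenance windows.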

Example

    $ sudo tos dr init
    [Jul 18 10:38:34] INFO DR initialization finished successfully

Generating an Authentication Token

Run this command on the current active cluster. It generates a token that you must then supply when running the tos dr connect command on the standby cluster.

Description

Generates a unique token that is used to authenticate the connection between the two clusters.

Syntax

[<ADMIN> ~]$ sudo tos dr generate-token

Example

    $ sudo tos dr generate-token
    [Jul 18 10:38:45] INFO Please save the token and use it when running the connect command from remote peer
    Token: z1obGYNhdcb85rsDI7IrygGfMP5rHFq50iygPcEWxnE=
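If you capture the command output in a script, the token can be pulled out of it. The Python sketch below assumes only that the token follows the literal text "Token:", as in the example output above. The function name is hypothetical.

```python
import re

# Sample output copied from the example above.
SAMPLE_OUTPUT = (
    "[Jul 18 10:38:45] INFO Please save the token and use it when running "
    "the connect command from remote peer\n"
    "Token: z1obGYNhdcb85rsDI7IrygGfMP5rHFq50iygPcEWxnE=\n"
)


def extract_token(output: str) -> str:
    """Return the value that follows "Token:" in the command output."""
    match = re.search(r"Token:\s*(\S+)", output)
    if match is None:
        raise ValueError("no token found in output")
    return match.group(1)


print(extract_token(SAMPLE_OUTPUT))
```

Treat the token as a credential: avoid writing it to shared logs or shell history.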

Connecting the Clusters

Run this command on the standby cluster.

Description

Connects the standby cluster with the active cluster. After the connection is complete, most of the services in the standby cluster will be shut down.

Syntax

[<ADMIN> ~]$ sudo tos dr connect [-n <name>][-p <IP_ADDRESS>][-t <TOKEN>]

Parameters

| Parameter | Description | Required/Optional | Possible Values |
|---|---|---|---|
| -n, --name | Use this to provide a name to help identify this cluster in the tos dr status command. If you don't use it, the hostname of the primary node will be used. | If you used the -n or --name parameter previously when running tos dr init on the active cluster, you must use the -n or --name parameter here as well, specifying a different name to represent the standby cluster. | Standard name convention |
| -p | Network IP address of the active cluster primary node (not the VIP) | Required | Actual machine IP address only |
| -t | Token generated | Required | Value generated from tos dr generate-token |

Example

    $ sudo tos dr connect -p 192.168.32.23 -t Zbk7lwH4Qu7rINz8DvuwjQgJgpWjcsgtxKe3h90=
    [Jul 18 10:39:07] INFO Local cluster state is "StandBy"
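The parameter rules for this command can be checked before it is run. The Python sketch below assembles the argument list and enforces the two constraints stated in the table: the peer address must be a literal IP address, and the standby name must differ from the active cluster's name if one was set. The function and its `active_name` argument are hypothetical helpers, not part of TOS, and the sketch only builds the command without executing it.

```python
import ipaddress


def build_dr_connect_argv(peer_ip, token, name=None, active_name=None) -> list:
    """Assemble the argument list for `sudo tos dr connect`.

    `active_name` is the name given to `tos dr init` on the active cluster, if any.
    """
    ipaddress.ip_address(peer_ip)  # raises ValueError for hostnames or malformed IPs
    if not token:
        raise ValueError("a token from tos dr generate-token is required")
    if active_name is not None and (name is None or name == active_name):
        raise ValueError("a different -n name is required for the standby cluster")
    argv = ["sudo", "tos", "dr", "connect"]
    if name is not None:
        argv += ["-n", name]
    argv += ["-p", peer_ip, "-t", token]
    return argv


# "site-a" and "site-b" are placeholder names; "<TOKEN>" stands for the real token.
print(build_dr_connect_argv("192.168.32.23", "<TOKEN>", name="site-b", active_name="site-a"))
```

This check cannot tell a node address from the VIP, so you still need to confirm that the address belongs to the primary node.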

Show the Status of the Clusters

Description

Displays the DR status of the active and standby peers. The standby cluster can view the backups taken on the active cluster.

Syntax

[<ADMIN> ~]$ sudo tos dr status

Example

    $ sudo tos dr status
    DR configuration:
      Backup policy rate: 24H
      Hour: 0:0h
      Last compatible healthy backup Timestamp: 2023-07-10 06:10:40 +0000 UTC
    Local cluster status:
      Name: local
      State: Active, Ready
      Last Update: 2023-07-10 09:33:45 +0300 IDT
      Version: 23.2.1100-20230709122412+23-2-pga.0.0
      HA: false
      Modules: ST, SC
    Peer cluster status:
      Name: remote
      State: StandBy, Ready
      Last Update: 2022-07-26 13:53:22 +0300 IDT
      Version: 23.2.1100-20230709122412+23-2-pga.0.0
      HA: false
      Modules: ST, SC
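For monitoring, the status report can be turned into a dictionary and checked against the prerequisites. The Python sketch below assumes a layout of unindented section headers followed by indented "Key: value" lines. The exact line breaks of the real output may differ, and the sample is a shortened excerpt of the example above. Both function names are hypothetical.

```python
# Shortened excerpt of the example output; the line layout is an assumption.
SAMPLE_STATUS = """\
Local cluster status:
  Name: local
  State: Active, Ready
  HA: false
  Modules: ST, SC
Peer cluster status:
  Name: remote
  State: StandBy, Ready
  HA: false
  Modules: ST, SC
"""


def parse_status(text: str) -> dict:
    """Parse "Section:" headers followed by indented "Key: value" lines."""
    sections = {}
    current = None
    for line in text.splitlines():
        if not line.strip():
            continue
        if not line.startswith(" ") and line.rstrip().endswith(":"):
            current = sections.setdefault(line.strip().rstrip(":"), {})
        elif current is not None and ":" in line:
            key, _, value = line.strip().partition(":")
            current[key.strip()] = value.strip()
    return sections


def peers_match(status: dict) -> bool:
    """True when both peers report the same HA setting and modules."""
    local = status["Local cluster status"]
    peer = status["Peer cluster status"]
    return all(local[k] == peer[k] for k in ("HA", "Modules"))


status = parse_status(SAMPLE_STATUS)
print(status["Peer cluster status"]["State"])  # StandBy, Ready
print(peers_match(status))  # True
```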

Switch Clusters

Run this command on the standby cluster to switch the roles of the two clusters: the standby cluster becomes the active cluster.

During the switch process, if the active cluster is still running, it is notified that a switch is in progress and all the services of that cluster will be shut down. The data on the new active cluster will be automatically restored from the most recent backup. You can optionally specify a different backup to use.

After the switch, you must make the necessary DNS changes to ensure that requests sent to the VIPs of the previous active cluster are redirected to the new active cluster.
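One way to confirm the DNS changes is to compare what each service hostname resolves to against the address of the new active cluster. The Python sketch below takes the resolved addresses as input rather than performing lookups itself. The hostnames, the addresses, and the function name are hypothetical.

```python
def hosts_not_redirected(resolved: dict, new_active_ip: str) -> list:
    """Return hostnames whose resolved address is not the new active cluster's."""
    return sorted(host for host, ip in resolved.items() if ip != new_active_ip)


# Placeholder lookup results, for example gathered with a DNS query tool.
resolved = {
    "securetrack.example.com": "10.0.1.10",   # still points at the previous active cluster
    "securechange.example.com": "10.0.2.10",  # already points at the new active cluster
}
print(hosts_not_redirected(resolved, "10.0.2.10"))  # ['securetrack.example.com']
```

An empty result means every listed hostname already resolves to the new active cluster. Cached records on clients may still take time to expire.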

Description

Switches activity between peer clusters.

Syntax

Parameters

| Parameter | Description | Required/Optional | Possible Values |
|---|---|---|---|
| | Backup to be used on switch. | Optional | Default: latest completed backup will be used |
| | Bypass confirmation | Optional | |

Example

    $ sudo tos dr switch
    [Jul 18 10:46:55] INFO Switching current peer to Active
    [Jul 18 10:46:55] INFO Setting peer to "Switch" mode

Disable Disaster Recovery

Description

Disables DR on the cluster.