AI Assistant Search Architecture

Overview

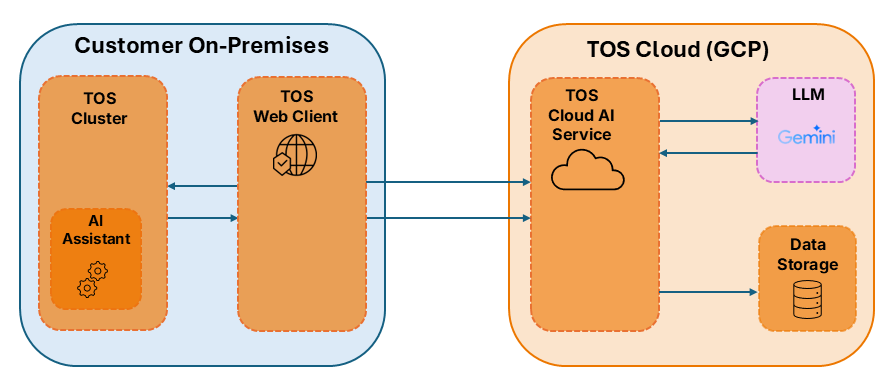

The AI Assistant Search architecture integrates on‑premises and cloud components to provide secure natural‑language search and Tufin Query Language (TQL) generation.

Architecture

The diagram illustrates the architecture of the AI Assistant Search Service. Licenses are validated and users are authenticated on-premises, while AI processing occurs in the Tufin Cloud.

On-Premises Components

AI Assistant Search Service

The AI Assistant Search Service is an on‑premises service that manages user authentication and verifies the license status, ensuring that only authorized users can access the AI capabilities.
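
As a rough illustration of this gating role, the following Python sketch checks a license and a user session before a query is allowed to proceed. The `License` and `Session` types, their fields, and the `may_use_ai_search` function are hypothetical names invented for this example; the service's actual data model and API are not described on this page.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class License:
    # Hypothetical fields; the real license format is not documented here.
    ai_search_enabled: bool
    expires_on: date


@dataclass
class Session:
    # Hypothetical representation of a logged-in TOS user.
    username: str
    authenticated: bool


def may_use_ai_search(lic: License, session: Session, today: date) -> bool:
    """Return True only if the license is valid and the user is authenticated."""
    license_ok = lic.ai_search_enabled and today <= lic.expires_on
    return license_ok and session.authenticated
```

In this sketch both checks must pass, so an authenticated user with an expired license is rejected in the same way as an unauthenticated user with a valid one.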

TOS Web Client

The TOS Web Client is the browser-based user interface integrated with the Tufin Orchestration Suite (TOS), where users submit natural language queries for AI processing. The TOS Web Client handles all interactions between the user and the cloud to ensure protected and compliant data exchange.

Cloud Components

TOS Cloud AI Service

The TOS Cloud AI Service is the central cloud-based component responsible for processing natural language queries. It functions as a secure, isolated service that never communicates directly with the on-premises environment.

LLM

The Large Language Model (LLM) is Gemini Flash 2.5. It powers natural language understanding and converts queries to Tufin Query Language (TQL).

Data Storage Service

The Data Storage Service securely stores the natural language queries, the generated TQL queries, and the feedback submitted by users. Sensitive data fields are automatically masked and encrypted to maintain customer data privacy.
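
To make the masking step concrete, here is a minimal Python sketch. This page does not specify which fields count as sensitive or how they are masked, so the sketch assumes, purely for illustration, that IPv4 addresses are sensitive and replaces them with a placeholder. The names `mask_sensitive` and `build_record` are hypothetical, and encryption is left out.

```python
import re

# Assumption: IPv4 addresses are treated as sensitive. The actual list of
# masked fields is not specified on this page.
IPV4_PATTERN = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def mask_sensitive(text: str, placeholder: str = "<masked-ip>") -> str:
    """Replace every IPv4-looking token in text with a placeholder."""
    return IPV4_PATTERN.sub(placeholder, text)


def build_record(nl_query: str, tql_query: str, feedback: str) -> dict:
    """Build the record to store, masking each field first."""
    return {
        "natural_language_query": mask_sensitive(nl_query),
        "tql_query": mask_sensitive(tql_query),
        "feedback": mask_sensitive(feedback),
    }
```

Masking before the record is built means that no unmasked value reaches storage in this sketch, whichever field it appears in.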

How Does AI Assistant Search Work?

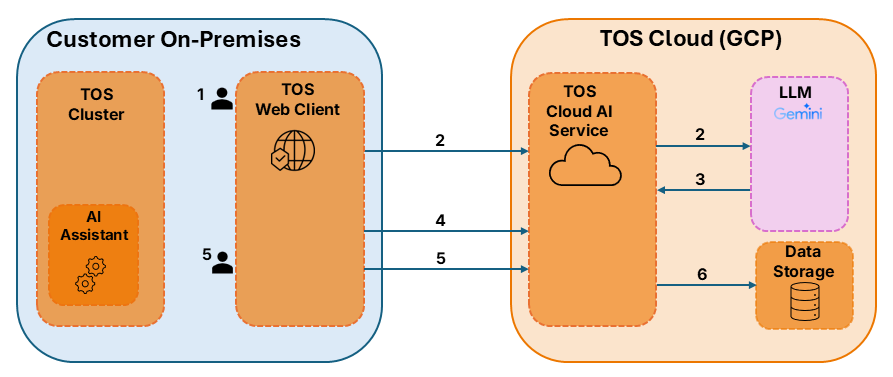

The diagram above illustrates the high-level data flow of the AI Assistant Search process when the user submits a natural language query. Each numbered arrow corresponds to a step in the sequence described in the list that follows.

1. The user enters a natural language query in the TOS Web Client.

2. The TOS Web Client securely sends the natural language query to the TOS Cloud AI Service, which forwards it to the LLM for processing.

3. The LLM (Gemini Flash 2.5):

   - Interprets the natural language input
   - Generates the equivalent TQL query
   - Forwards the TQL query to the TOS Cloud AI Service

4. The TOS Web Client:

   - Pulls the results from the TOS Cloud AI Service
   - Displays the natural language query, the TQL query, and the search results to the user

5. The user submits feedback, and the TOS Web Client forwards it to the TOS Cloud AI Service.

6. The TOS Cloud AI Service masks sensitive values and saves the natural language query, the TQL query, and the feedback in the database.
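
The sequence above can be sketched in Python as follows. This is an illustrative model only: `CloudAIService`, `run_search`, and the `translate` callable that stands in for the LLM are hypothetical names, not part of any documented Tufin API. Retrieval of search results and masking of sensitive values are omitted to keep the sketch short.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CloudAIService:
    # `translate` stands in for the LLM call; it is a placeholder, not a real API.
    translate: Callable[[str], str]
    stored: list = field(default_factory=list)

    def handle_query(self, nl_query: str) -> str:
        # Forward the query to the LLM and receive the generated TQL query.
        return self.translate(nl_query)

    def handle_feedback(self, nl_query: str, tql_query: str, feedback: str) -> None:
        # Save the query, the TQL query, and the feedback.
        # Masking of sensitive values is omitted in this sketch.
        self.stored.append({"nl": nl_query, "tql": tql_query, "feedback": feedback})


def run_search(service: CloudAIService, nl_query: str, feedback: str) -> dict:
    # The user's natural language query arrives as nl_query.
    tql_query = service.handle_query(nl_query)
    # The web client displays the original query and the TQL query.
    shown = {"nl": nl_query, "tql": tql_query}
    # The user's feedback is forwarded to the cloud service and stored.
    service.handle_feedback(nl_query, tql_query, feedback)
    return shown
```

Passing the LLM in as a callable keeps the sketch runnable without network access; a stub such as `lambda q: "TQL(" + q + ")"` is enough to exercise the flow.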
